From November 2021 to February 2022, I had the opportunity to contribute to the audio for Project Lyra, Epic Games' demo project for Unreal Engine 5.0. This was my second foray into the world of Unreal, after spending five years working on the UE4 game Solar Ash. My primary task was to explore Metasounds, the new visual graph for audio and DSP. I've found Blueprints (and now Metasounds) to generally be a lot of fun to use. Node-based systems like these make prototyping very fast because they shorten the distance between an idea and a working result. In my time on the project, I built four reusable systems.

One of the caveats of working on an official demo project for Unreal is that you must avoid using commercially available sounds. The sound elements had to be entirely original or already owned by Epic Games. Following this guideline proved tricky but was an interesting aesthetic challenge, especially for music.

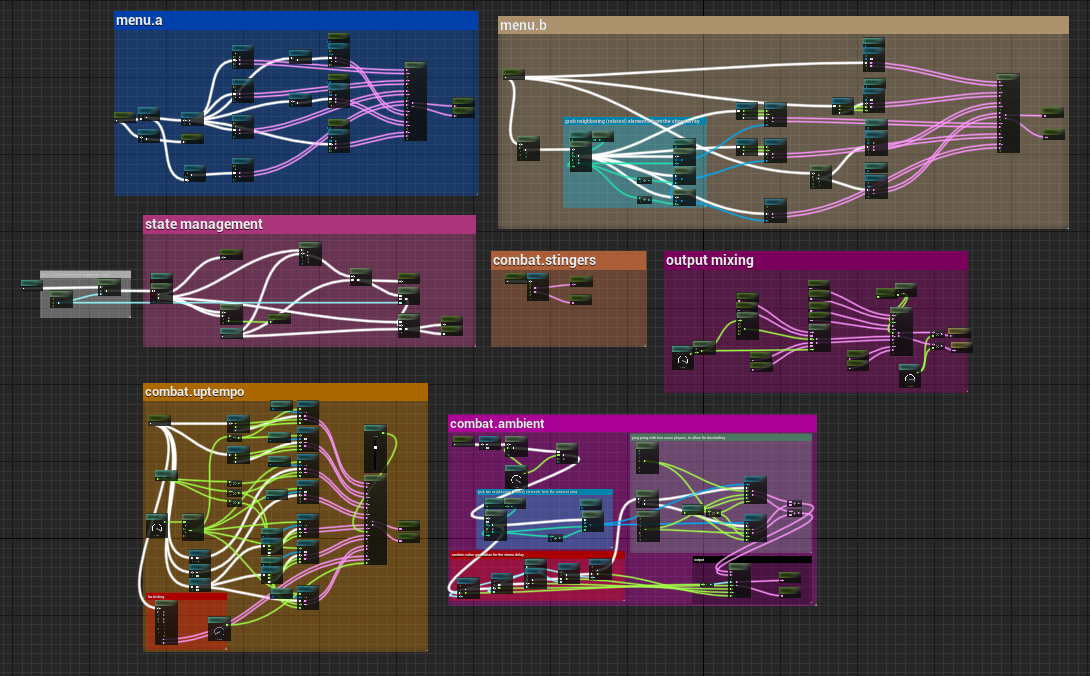

Music System

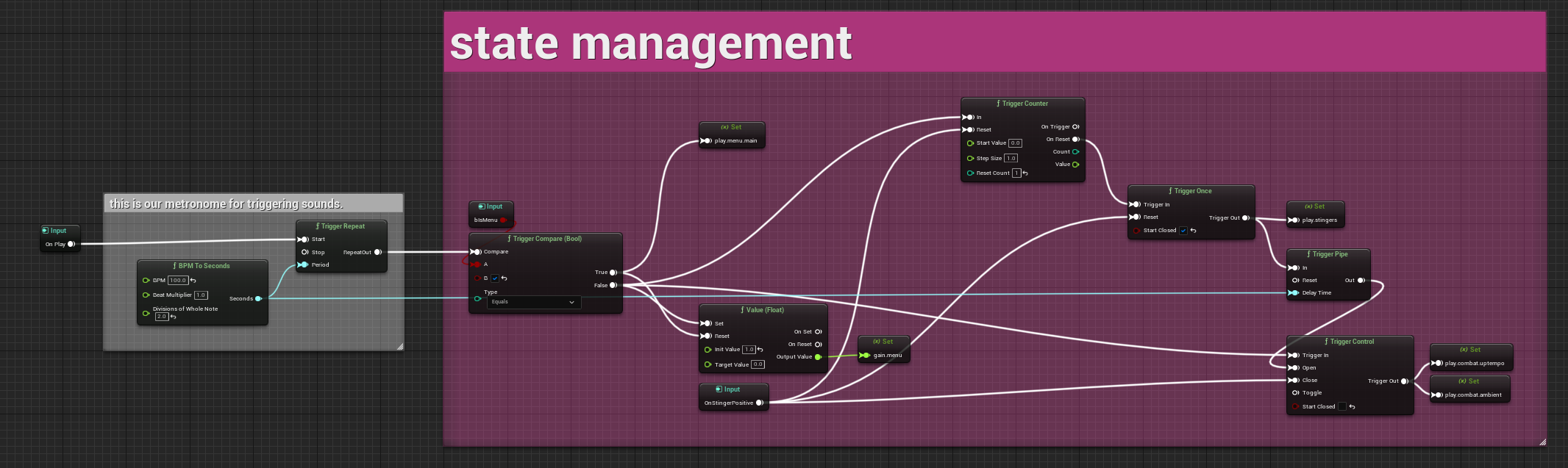

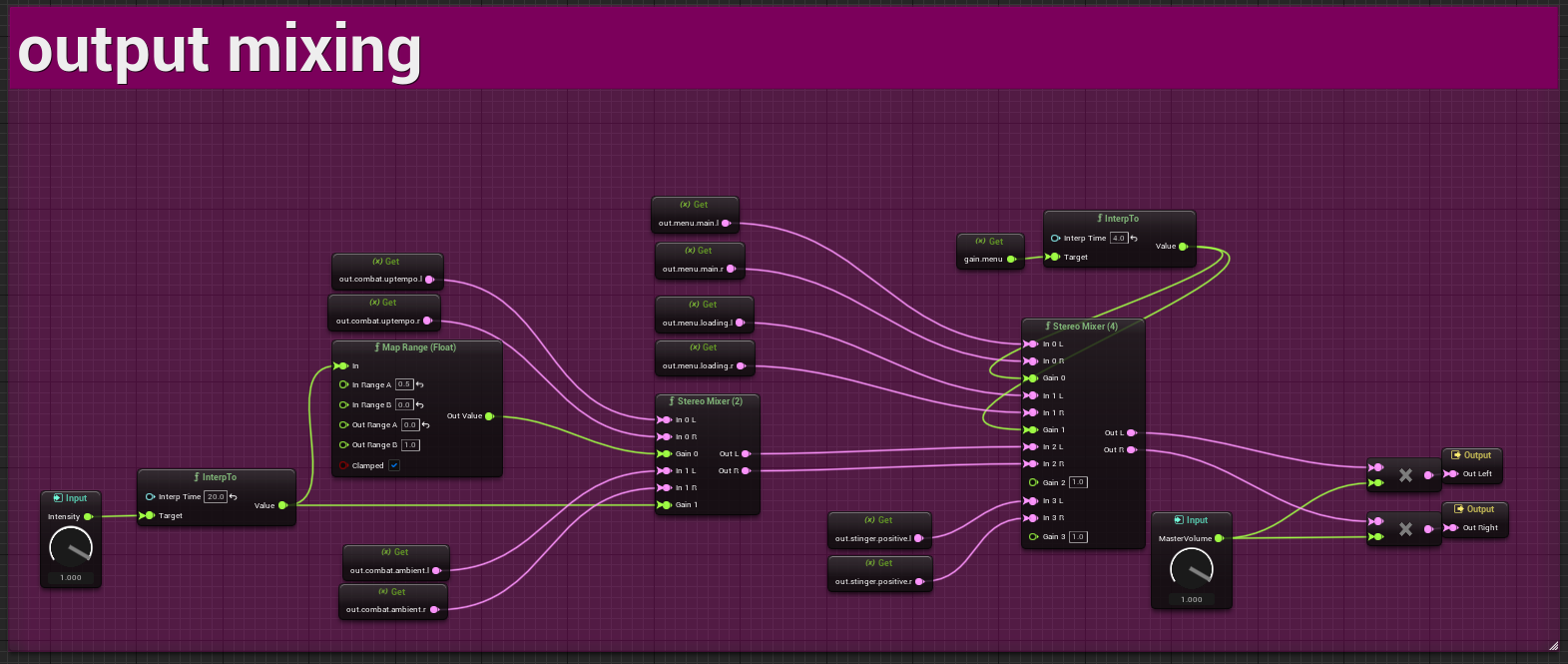

The music system uses well-trodden techniques like vertical layering, horizontal sequencing, dovetailing, crossfading, and probability to drive changes in music over time. The system uses a Metasound Source stored on the Game Instance, set to persist across level transitions. When loading into the main menu or a gameplay map, a component is spawned that manages the music system. This generally involves sending information to the system about how intense it should be, whether we're in a menu or not, etc.

The system has five general states: two for the menus and one each for uptempo combat music, ambient combat music, and stingers.

Menu Music

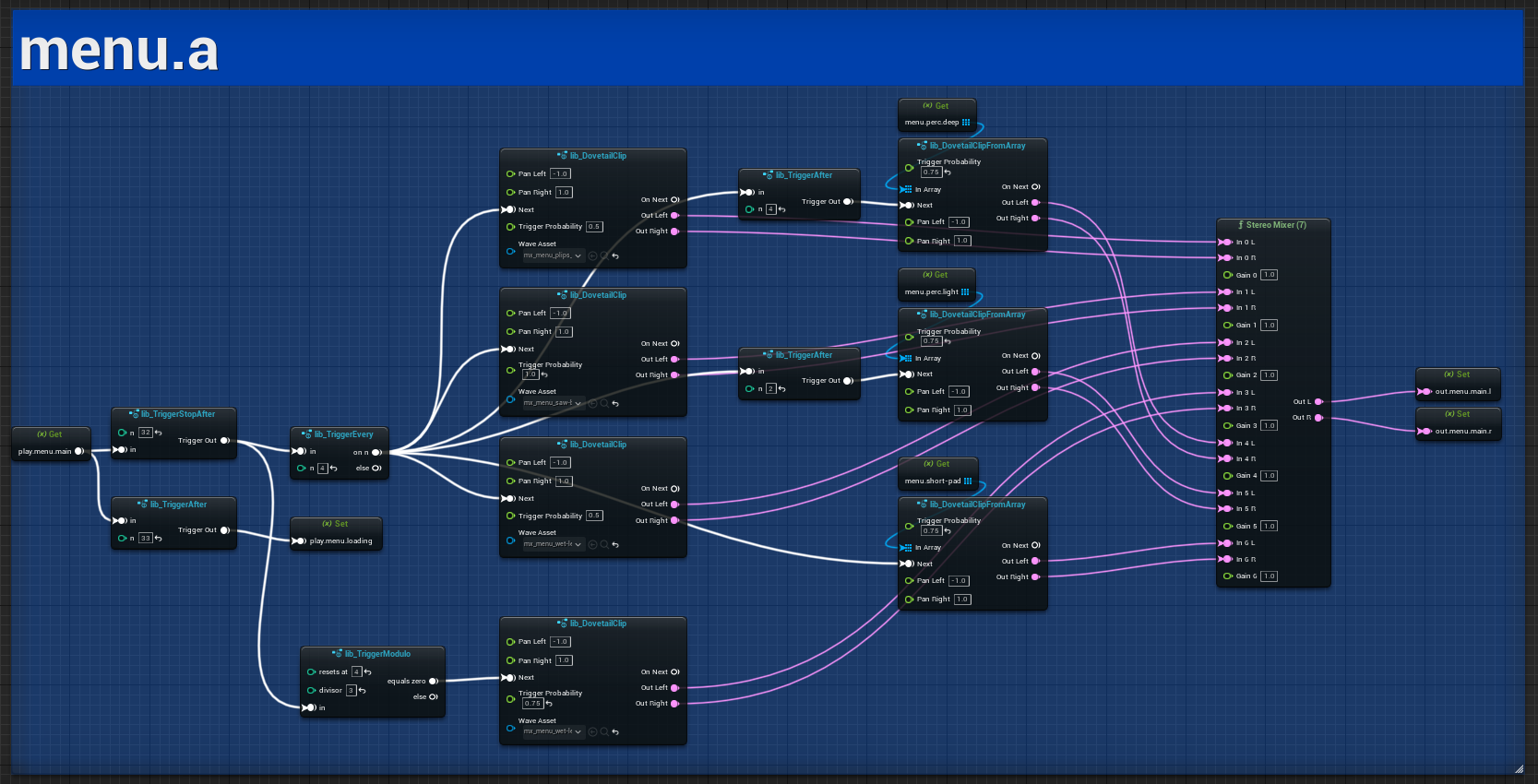

The music for the main menu has two separate subsystems, which represent the "A" and the "B" sections of the song form.

The "A" section is more thematic and uses count-based sequencing to give it a general structure. This section continues for 8 bars, waits for a beat, then transitions to the "B" section.

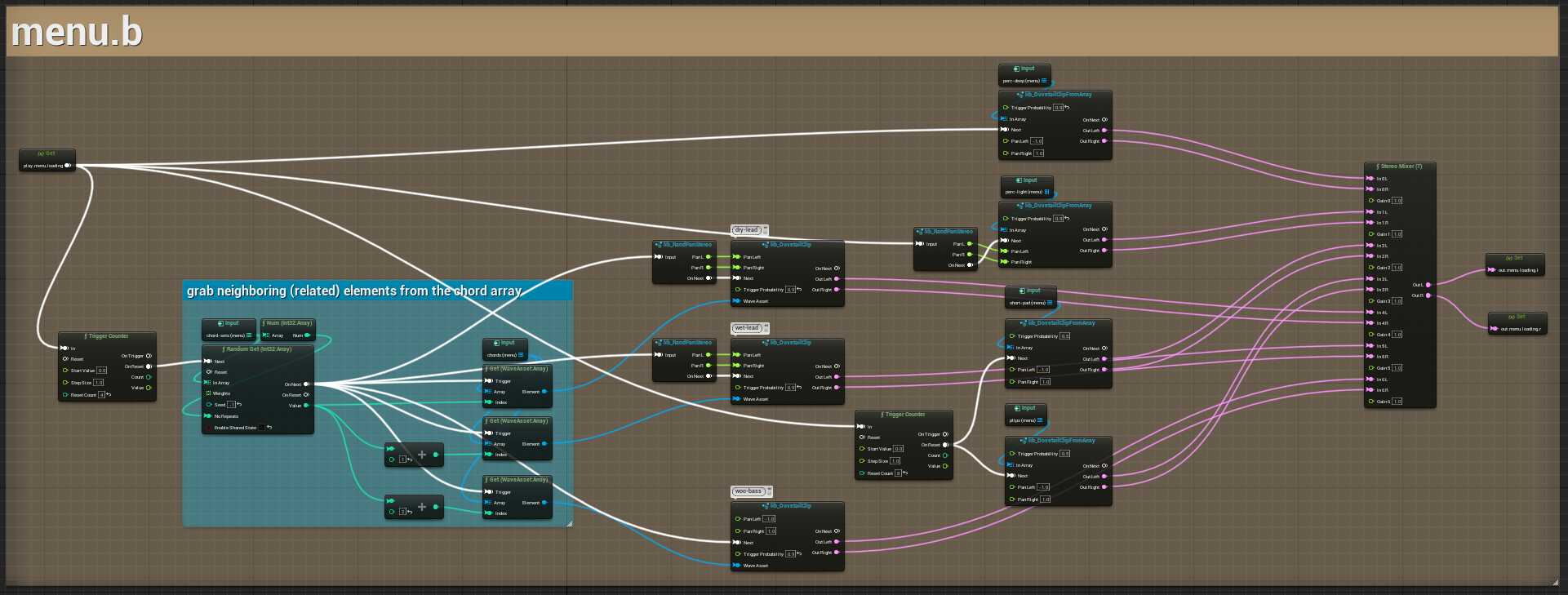

The "B" section has no time-based structure and relies entirely on trigger probability. This section is broken down into sound sets for five different chords, and a new chord is randomly selected for each bar. Some elements, such as percussion, are agnostic to these chord changes.

Combat Music

When entering gameplay, the music system switches to combat music. The combat music is driven by a 0-1 value based on combat intensity. This value increases with gameplay events such as the local player dying, firing their weapon, or taking damage. The value naturally decreases slowly over time so that when you're not involved in the action, you hear more music.
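The intensity value described above could be modeled roughly as follows. This is a minimal sketch, not the Lyra implementation: the `CombatIntensity` class, the per-event amounts, and the decay rate are all hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class CombatIntensity:
    """Tracks a 0-1 intensity that rises on events and decays over time."""

    value: float = 0.0
    decay_per_second: float = 0.05  # assumed decay rate, not from the article

    def add_event(self, amount: float) -> None:
        # Gameplay events (firing, taking damage, dying) push the value up.
        self.value = min(1.0, max(0.0, self.value + amount))

    def tick(self, delta_seconds: float) -> None:
        # With no events, the value drifts back toward zero.
        self.value = max(0.0, self.value - self.decay_per_second * delta_seconds)
```

A caller would invoke `add_event` from gameplay hooks and `tick` once per update, then forward `value` to the music system.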

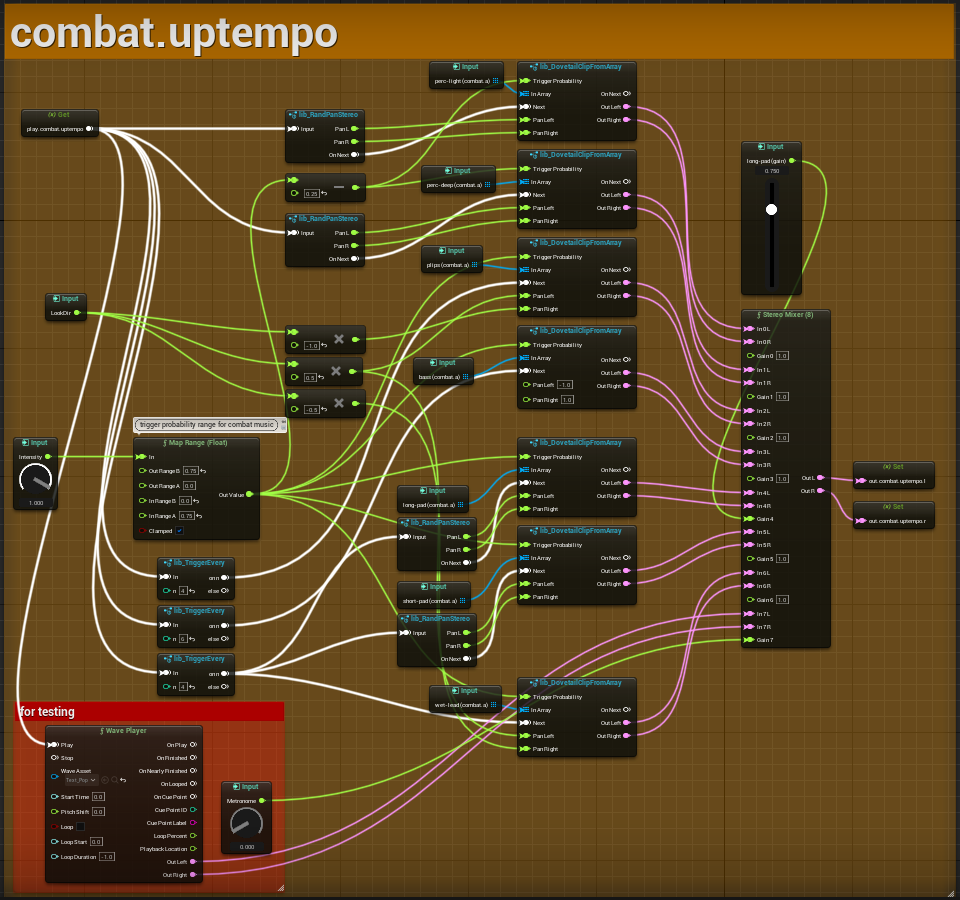

Uptempo Music

The uptempo music is sequenced primarily using probabilities for individual elements. The likelihood of these elements playing increases as the intensity decreases.

The lower the intensity parameter is, the more we hear uptempo elements like fast percussion and ostinati. The intensity parameter also drives a crossfade between the uptempo and ambient music assets. Initially, the music was designed to grow more active as the combat intensity increased; however, this created too much cacophony between the music and sound effects. Ultimately the system works best when the music gets out of the way when there is more weapon fire between players.
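As a rough illustration of the two ideas in this section, the sketch below maps intensity to a play probability and to a pair of crossfade gains. The linear probability mapping, the equal-power curve, and the assumption that intensity 0 means fully uptempo are mine; the article does not specify the curves used in the Metasound graph.

```python
import math
import random


def _clamp01(x: float) -> float:
    return min(1.0, max(0.0, x))


def uptempo_probability(intensity: float) -> float:
    # Assumed linear mapping: lower intensity means more likely to play.
    return 1.0 - _clamp01(intensity)


def should_play_element(intensity: float, rng: random.Random) -> bool:
    # Roll once per trigger to decide whether an element plays.
    return rng.random() < uptempo_probability(intensity)


def equal_power_crossfade(intensity: float) -> tuple:
    # Returns (uptempo_gain, ambient_gain) on an equal-power curve.
    angle = _clamp01(intensity) * math.pi / 2.0
    return math.cos(angle), math.sin(angle)
```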

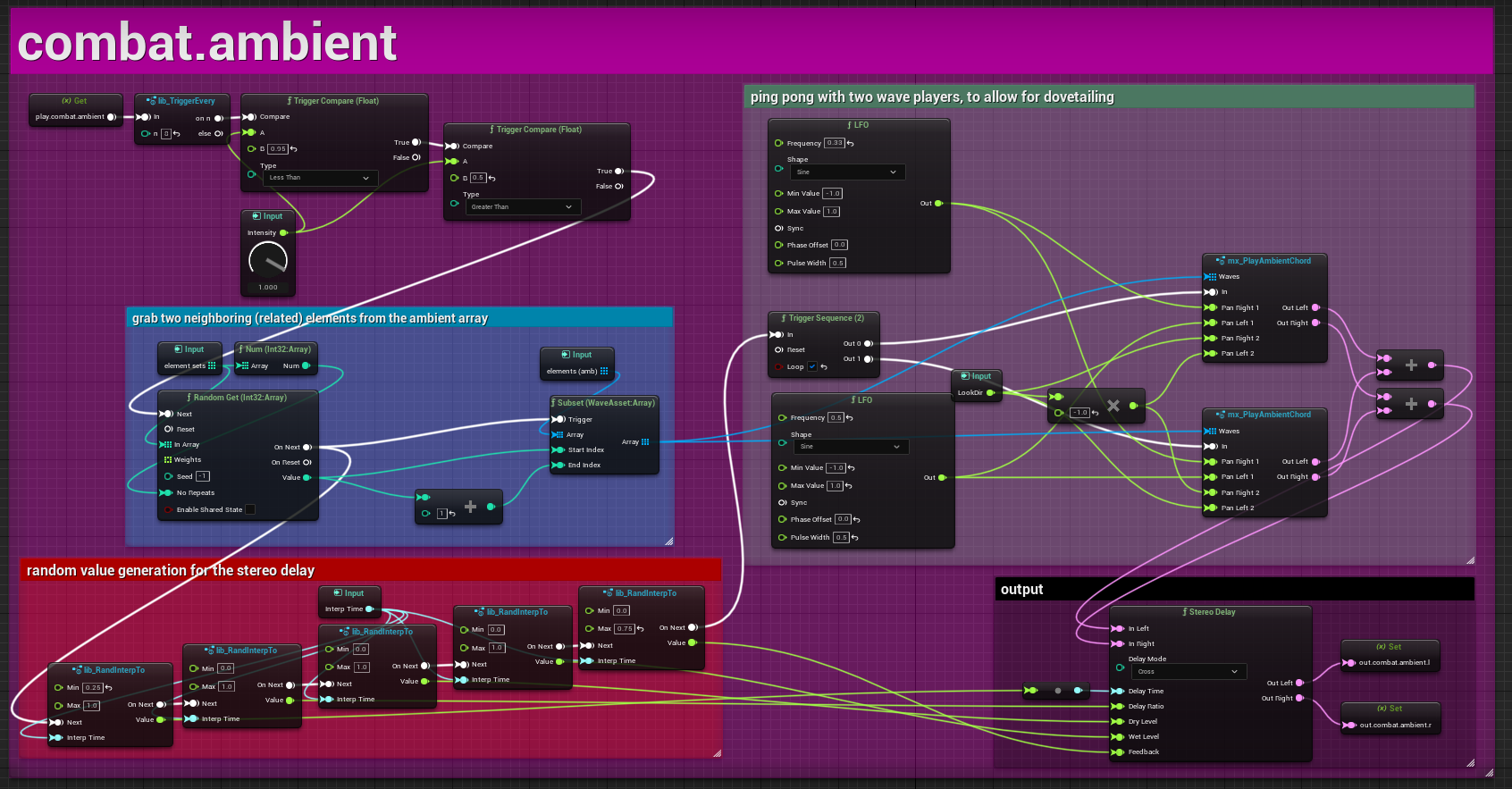

Ambient Music

The ambient music is made of two layers, a short pad and a long pad, going through a heavily modulated stereo delay. The volume and timing of these layers are randomly altered each time to create more interest and variation. The player's looking direction is also used to modulate the pan position of these sounds.

The ambient music during combat exists at a very particular threshold. The state is triggered when the intensity is high, but only between 50 and 90%. Because the intensity parameter continually decreases when nothing is happening, the ambient phrases serve as a nice buffer between high combat scenarios. For instance, if you're escaping an encounter to regroup and gather ammo, this is generally the type of moment that you might hear ambient music.

Stingers

In the context of this system, stingers are small segments of thematic, uptempo music triggered by Blueprints during positive events, such as capturing a control point or winning a round. The music returns to its normal behavior once the stinger is over. When a stinger is invoked, the combat intensity is also set to zero, which allows the uptempo music to play for a while afterward until you re-enter the action.

Takeaways

If I had to do it over again, I would do the music sequencing logic in a Blueprint system like Quartz. This way, Metasounds can focus on what it does best: audio manipulation. Using Quartz, you can send triggers to Metasounds to drive the music. Blueprints are better suited to managing stateful systems, whereas Metasounds' strength comes from handling audio directly at the source.

Early Reflections

In a third-person shooter, audio has a significant role to play in offering users strategic feedback about where other players are at any moment and what they might be doing. One of our stretch goals for Lyra was to build a reflection system to help with not only localizing weapon fire but also improving the feeling of firing your weapon in different rooms and environments. It's a subtle effect when done well but can add a lot of realism and energy to the sound of a game.

I built a dynamic reflection system for the game Solar Ash, and while it's a bit different from this, it did give me a place to start with my experimentation.

This system uses line trace data to calculate the delay, panning, and gain of a tap delay with 8 taps. There is one trace per tap, 8 in all. Every time the local player fires a weapon, the early reflection system updates the properties of the 8 taps in the tap delay Submix Effect. There is a dedicated Submix for the early reflection system, which all weapon fire sounds send themselves to. There is also a low pass filter on the Submix to darken sounds going into the tap delay. This helps alleviate phase issues when using short delay times.

Weapon Reflections

Two traces are made at a 45-degree angle (either way) from the player's firing direction. These are generally the first reflections to hit something and help create a different sonic signature for when you're standing up against a wall versus out in the open.

Projectile Reflections

If the projectile fired by the player's weapon hits a surface, there are (at least) four additional traces that are made.

There's a line of sight trace from the firing weapon, which is actually pre-calculated by the gameplay system. Then three additional traces are made from the location where the projectile hit the surface. There's a "mirror" trace that uses the surface normal (angle) of the impact to project another trace, and then two traces each at a 45-degree angle either way from the mirror trace.

Finally, three additional traces are created if the mirror trace hits a surface. Another mirror trace is projected from that location, in addition to two 45-degree angle traces on either side.
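The mirror trace and its two 45-degree companions can be sketched with a standard vector reflection. This is only an approximation of the idea: the helper names are hypothetical, and rotating the side traces around the world up axis (Z) is my assumption, since the article does not say which axis the 45-degree offset uses.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _normalize(v: Vec3) -> Vec3:
    length = math.sqrt(_dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def reflect(direction: Vec3, normal: Vec3) -> Vec3:
    # Mirror the incoming direction about the surface normal: r = d - 2(d.n)n
    n = _normalize(normal)
    d = _dot(direction, n)
    return (direction[0] - 2 * d * n[0],
            direction[1] - 2 * d * n[1],
            direction[2] - 2 * d * n[2])


def rotate_yaw(v: Vec3, degrees: float) -> Vec3:
    # Rotate around the Z (up) axis; the choice of axis is an assumption.
    r = math.radians(degrees)
    c, s = math.cos(r), math.sin(r)
    return (v[0] * c - v[1] * s, v[0] * s + v[1] * c, v[2])


def reflection_fan(incoming: Vec3, normal: Vec3, spread: float = 45.0) -> List[Vec3]:
    # One mirror direction plus one direction on either side of it.
    mirror = reflect(incoming, normal)
    return [rotate_yaw(mirror, -spread), mirror, rotate_yaw(mirror, spread)]
```

Each returned direction would then be used as a line trace starting at the impact location.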

Data Interpretation

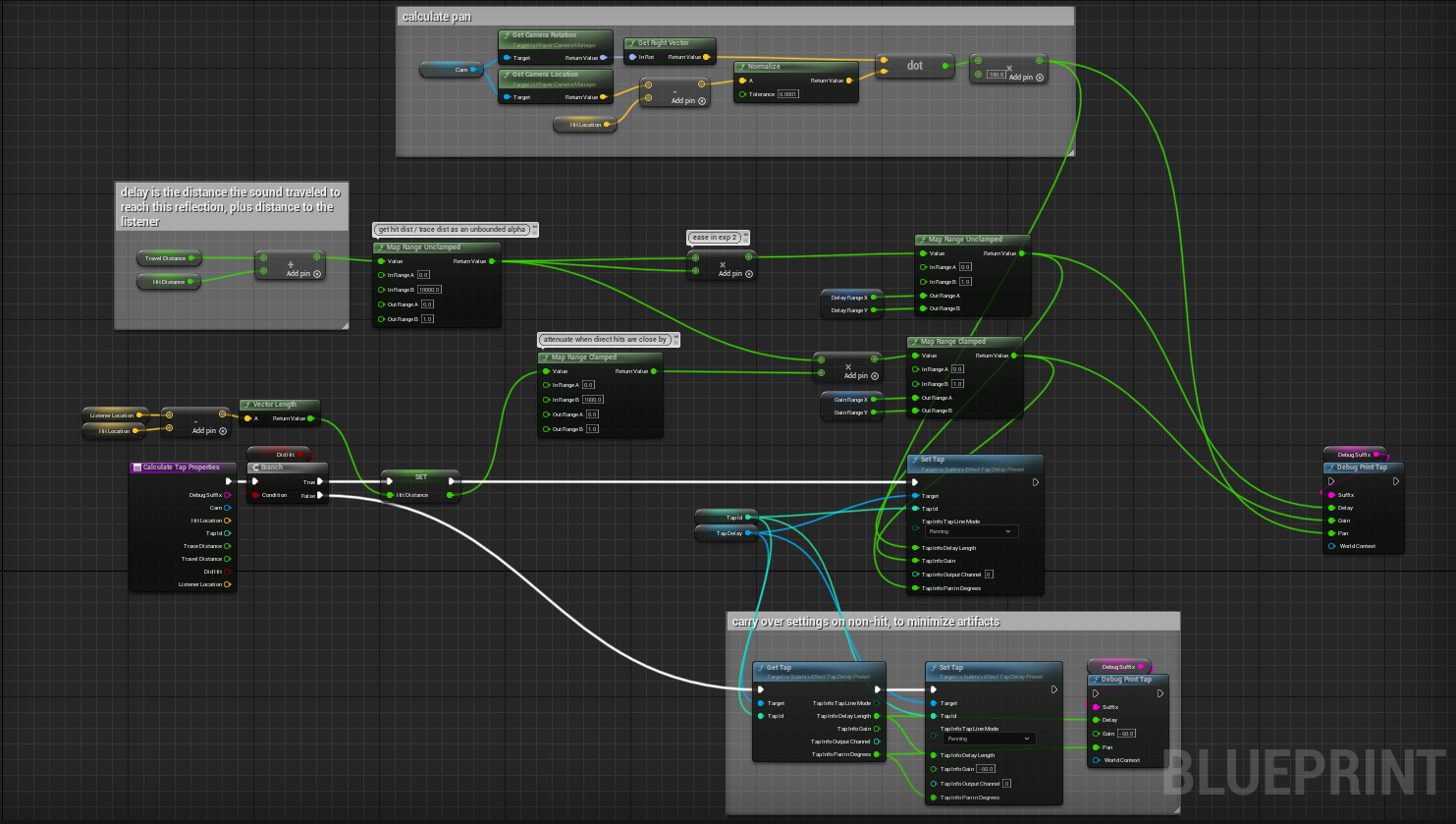

The tap delay is driven by data from the line traces. A trace's distance, position, and impact normal are used to calculate a tap's gain, pan, and delay time. Taps are set to -60dB (the quietest setting) when no surface is hit for the respective reflection.

Delay Time

The delay is calculated from the travel distance of the reflection. As an example, the travel distance of the mirror reflection would be the distance from the weapon to the line of sight impact, plus the distance from the line of sight impact to the surface that the mirror reflection hit. The distance from that location to the audio listener is then added. This is all done to approximate the effect of the speed of sound.
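The mirror reflection example works out to a path length divided by the speed of sound. The sketch below assumes one Unreal unit equals one centimeter and a speed of sound of 343 m/s; the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Assumption: 1 unit = 1 cm, so 343 m/s becomes 34300 units per second.
SPEED_OF_SOUND_UNITS_PER_SECOND = 34300.0


def path_length(points: Sequence[Vec3]) -> float:
    # Sum the straight-line distance between each consecutive pair of points.
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def mirror_reflection_delay(weapon: Vec3, impact: Vec3,
                            mirror_hit: Vec3, listener: Vec3) -> float:
    # weapon -> line of sight impact -> mirror reflection hit -> listener
    distance = path_length([weapon, impact, mirror_hit, listener])
    return distance / SPEED_OF_SOUND_UNITS_PER_SECOND
```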

Gain

Similar to delay, travel distance is used to attenuate the gain level of each reflection. In addition, reflections within the first 1000 units are linearly attenuated, where a reflection at a distance of 0 units would be silent. In real life, there are lots of bad-sounding rooms. Sometimes when you try to mimic the laws of nature in games, you get bad-sounding results that also happen to be accurate. Reducing the volume of nearby reflections alleviates artifacts created by a system of this type.
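One way to combine the two attenuation stages is shown below. Only the 1000-unit linear ramp and the -60dB silent setting come from the text; the linear far-distance falloff and its `max_distance` value are placeholders I made up, since the article does not describe the actual curve.

```python
def near_field_ramp(distance: float, ramp_distance: float = 1000.0) -> float:
    # 0.0 at distance 0, rising linearly to 1.0 at ramp_distance and beyond.
    return min(max(distance / ramp_distance, 0.0), 1.0)


def distance_falloff(distance: float, max_distance: float = 10000.0) -> float:
    # Hypothetical linear falloff; the real curve is not given in the article.
    return min(max(1.0 - distance / max_distance, 0.0), 1.0)


def reflection_gain(distance: float, hit: bool = True) -> float:
    # Linear gain for one tap. Taps with no surface hit sit at -60 dB.
    if not hit:
        return 10.0 ** (-60.0 / 20.0)
    return near_field_ramp(distance) * distance_falloff(distance)
```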

Pan

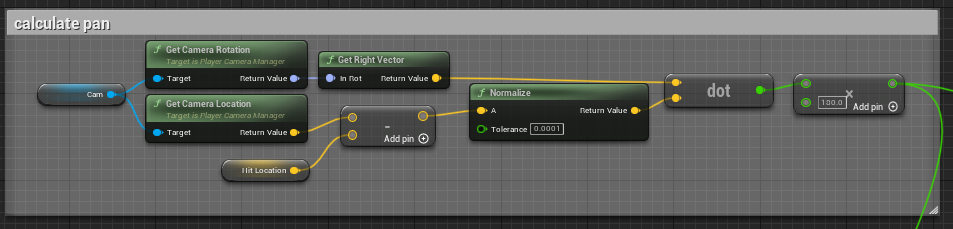

The panning of each tap is calculated by taking the dot product of two directions: the camera's right vector and the direction from the camera to the reflection location.
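In code, the pan calculation is a normalized dot product. A small sketch follows; the function names are hypothetical, and the convention that -1 is hard left and +1 is hard right is an assumption.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _normalize(v: Vec3) -> Vec3:
    length = math.sqrt(_dot(v, v))
    if length == 0.0:
        return (0.0, 0.0, 0.0)
    return (v[0] / length, v[1] / length, v[2] / length)


def tap_pan(camera_pos: Vec3, camera_right: Vec3, reflection_pos: Vec3) -> float:
    # Returns a value in [-1, 1]; 0 means the reflection is straight ahead,
    # behind, above, or below the camera.
    to_reflection = _normalize(_sub(reflection_pos, camera_pos))
    return _dot(_normalize(camera_right), to_reflection)
```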

Whiz-By System

When someone is shooting at you from off-screen, it's helpful to know where it's coming from and how close their fire is to you. A whiz-by system helps by sonifying bullets and other projectiles passing uncomfortably close to your player.

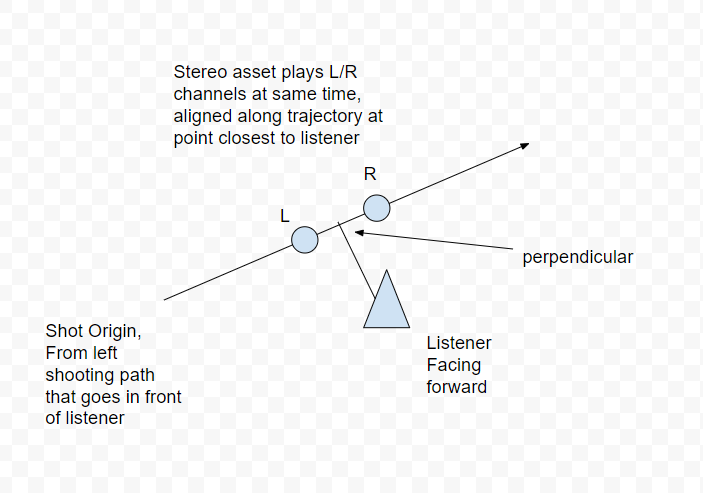

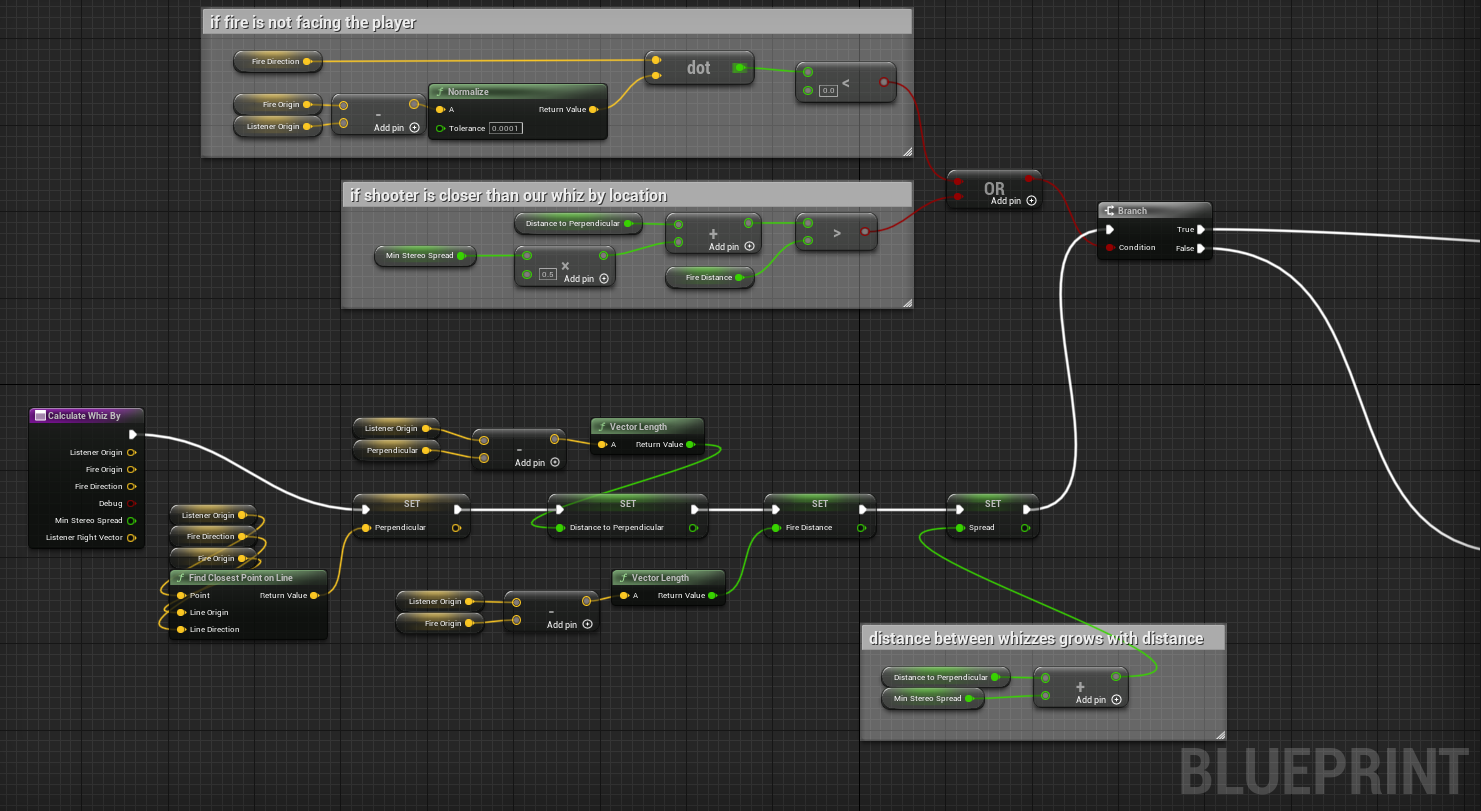

Find the Closest Point

An ideal location for the whiz-by sounds is at the closest point to the listener on the weapon fire vector. This is also the point where a perpendicular dropped from the listener meets that vector. Then we can use the stereo spread attenuation property to place the oncoming sound slightly before the listener and the receding sound slightly after, along that same weapon fire trajectory.
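Finding that closest point is a projection of the listener position onto the shot's path. The sketch below treats the shot as a segment from a start point to an end point and clamps the result to it; the function name and the clamping are my assumptions.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def closest_point_on_segment(start: Vec3, end: Vec3, listener: Vec3) -> Vec3:
    seg = (end[0] - start[0], end[1] - start[1], end[2] - start[2])
    seg_len_sq = seg[0] ** 2 + seg[1] ** 2 + seg[2] ** 2
    if seg_len_sq == 0.0:
        return start
    to_listener = (listener[0] - start[0],
                   listener[1] - start[1],
                   listener[2] - start[2])
    # Fraction along the segment where the perpendicular from the listener lands.
    t = (to_listener[0] * seg[0] + to_listener[1] * seg[1]
         + to_listener[2] * seg[2]) / seg_len_sq
    t = min(max(t, 0.0), 1.0)  # stay on the segment
    return (start[0] + seg[0] * t, start[1] + seg[1] * t, start[2] + seg[2] * t)
```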

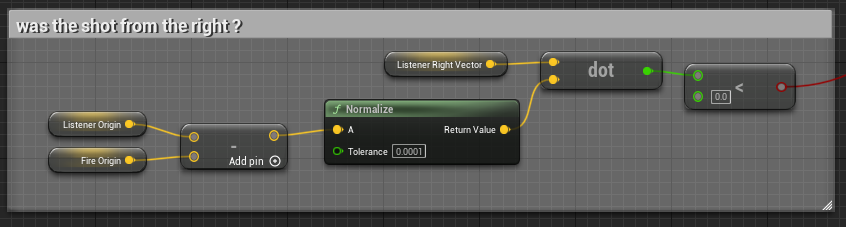

Directionality

One of the key ingredients of this system is determining whether a shot across your bow is coming from the left or the right. Figuring this out requires some vector math.
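One plausible way to work out the side, which may differ from the approach used in Lyra, is to compare the shot's travel direction with the listener's right vector: a shot travelling toward the listener's right must have come from the left. The function name and the string return values are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def shot_comes_from(shot_direction: Vec3, listener_right: Vec3) -> str:
    # Positive dot product: the shot is moving toward the listener's right,
    # so it originated on the left. Negative: the opposite.
    d = (shot_direction[0] * listener_right[0]
         + shot_direction[1] * listener_right[1]
         + shot_direction[2] * listener_right[2])
    if d > 0.0:
        return "left"
    if d < 0.0:
        return "right"
    return "center"
```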

Undesirable Conditions

There are circumstances where we don't want to hear a whiz-by. When the projectile is too close, hits the player, or the weapon fire isn't heading towards the player at all, we bypass the system.
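These bypass conditions could be checked along the following lines. The `min_pass_distance` threshold is a made-up value, and treating "not heading towards the player" as "the listener is behind the shot's origin" is my interpretation rather than something the article states.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def should_play_whiz_by(shot_start: Vec3, shot_direction: Vec3, listener: Vec3,
                        hit_player: bool, min_pass_distance: float = 50.0) -> bool:
    if hit_player:
        return False
    length = math.sqrt(sum(c * c for c in shot_direction))
    if length == 0.0:
        return False
    d = tuple(c / length for c in shot_direction)
    to_listener = tuple(l - s for l, s in zip(listener, shot_start))
    # Distance along the shot direction at which it passes the listener.
    along = sum(a * b for a, b in zip(to_listener, d))
    if along <= 0.0:
        return False  # the shot is travelling away from the listener
    closest = tuple(s + c * along for s, c in zip(shot_start, d))
    # Skip shots that pass too close to the listener.
    return math.dist(closest, listener) >= min_pass_distance
```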

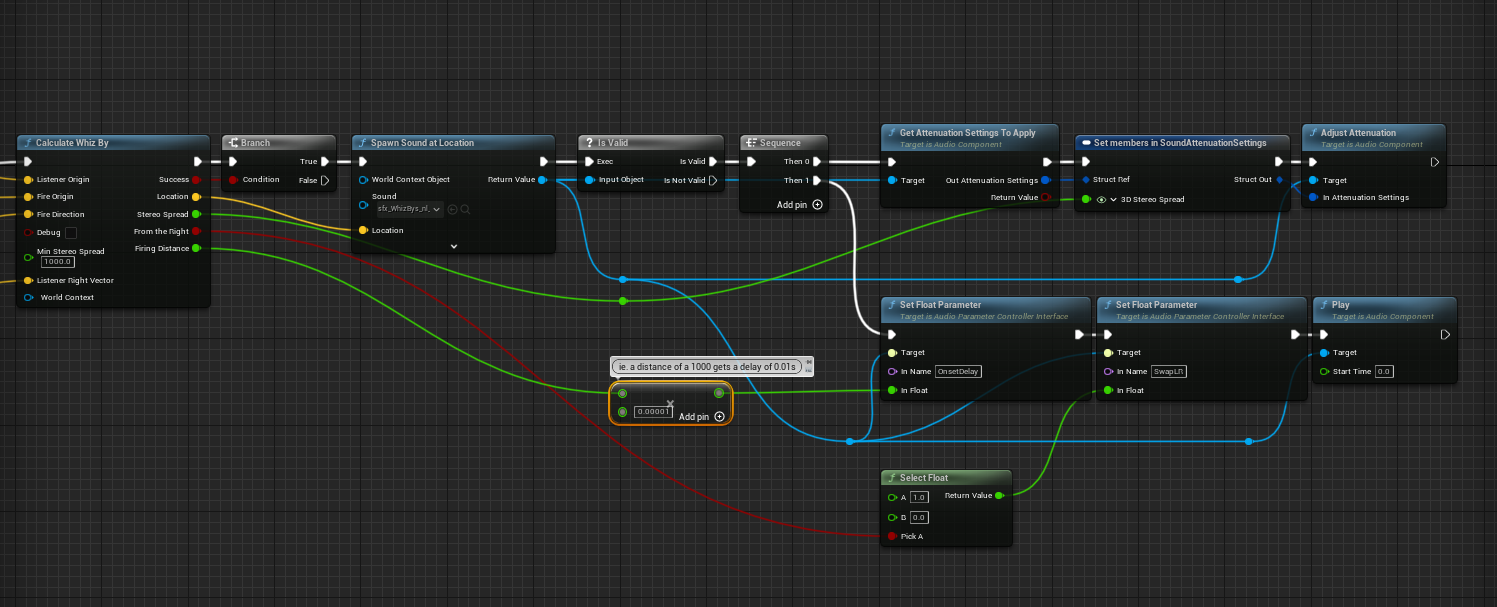

The Speed of Sound

The whiz-by system uses two types of sounds: an oncoming sound moving towards you and a receding sound that has already whizzed past you. The playback of the receding sound is slightly delayed so that you hear it second. Both oncoming and receding sounds are also delayed based on the player's distance. These delays help sell the effect as it mimics the behavior of sound traveling in the real world.

Wind System

When we found out that the Control map for Lyra would be outside, I thought it would be a good idea to build a dynamic wind system. By tracking the position of nearby walls and ceilings, you can create reactive wind sounds. The system also responds to the player's speed, so when in freefall or moving really quickly via a launchpad, you will hear the wind as if it was blowing past the player's ears.

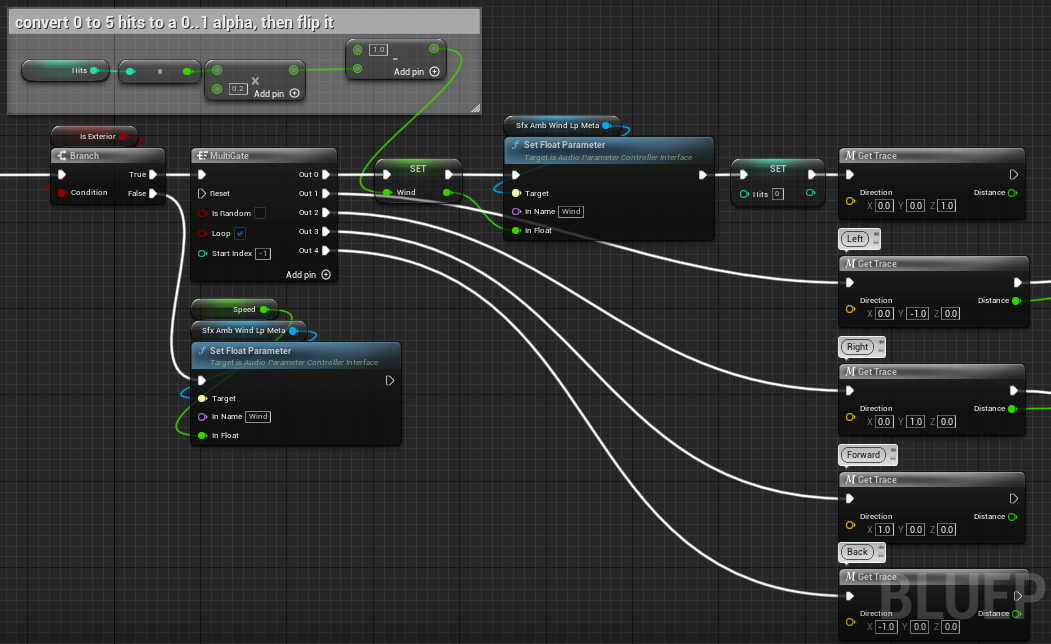

Using Line Traces

The wind system uses an actor Blueprint that does one trace per tick. The system also ticks less often than every frame (via Set Tick Interval), as it is not necessary to have that level of fidelity. Spreading the trace calculations over multiple ticks reduces the performance load of the system.
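The one-trace-per-tick idea amounts to a round-robin over the trace directions. Here is a minimal sketch; the `RoundRobinTracer` class and the `trace_fn` callback are hypothetical stand-ins for the Blueprint logic and the engine's line trace.

```python
from itertools import cycle
from typing import Callable, Dict, List, Optional


class RoundRobinTracer:
    """Performs one trace per tick, cycling through a fixed set of directions."""

    def __init__(self, directions: List[str], trace_fn: Callable[[str], bool]):
        self._order = cycle(directions)
        self._trace_fn = trace_fn
        # Latest known result per direction; None until first traced.
        self.results: Dict[str, Optional[bool]] = {name: None for name in directions}

    def tick(self) -> str:
        # Only one direction is traced on each tick.
        name = next(self._order)
        self.results[name] = self._trace_fn(name)
        return name
```

With five directions, every result is refreshed once every five ticks, which is usually fine for slowly changing surroundings.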

Mapping Geometry to Attenuation

The attenuation of the wind is calculated using five line traces projected outward from the player position: left, forward, right, back, and up. Depending on how many of these five traces hit geometry (i.e., a wall or a ceiling), we get one of five values: 0%, 25%, 50%, 75%, or 100%. The more traces hit something, the less wind we want there to be. On the Metasound side, we also need to interpolate this value, so it doesn't simply step between 0.00, 0.25, 0.50, etc. As a result, we get a smoother value that is more useful for driving the various filters and properties that control the wind. It also allows us to dial in the sound's reactivity. This way, when the player is moving through spaces, the wind doesn't feel too slow or fast to react to environmental changes.
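The interpolation step can be pictured as an exponential glide toward the latest stepped value. This sketch is not the Metasound node itself; `smooth_toward` and its `time_constant` are hypothetical, with the time constant playing the role of the reactivity control mentioned above.

```python
import math


def smooth_toward(current: float, target: float, delta_seconds: float,
                  time_constant: float = 0.5) -> float:
    # A smaller time_constant reacts faster; a larger one feels more sluggish.
    alpha = 1.0 - math.exp(-delta_seconds / time_constant)
    return current + (target - current) * alpha
```

Calling this once per update with the stepped attenuation as `target` produces a value that eases between steps instead of jumping.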

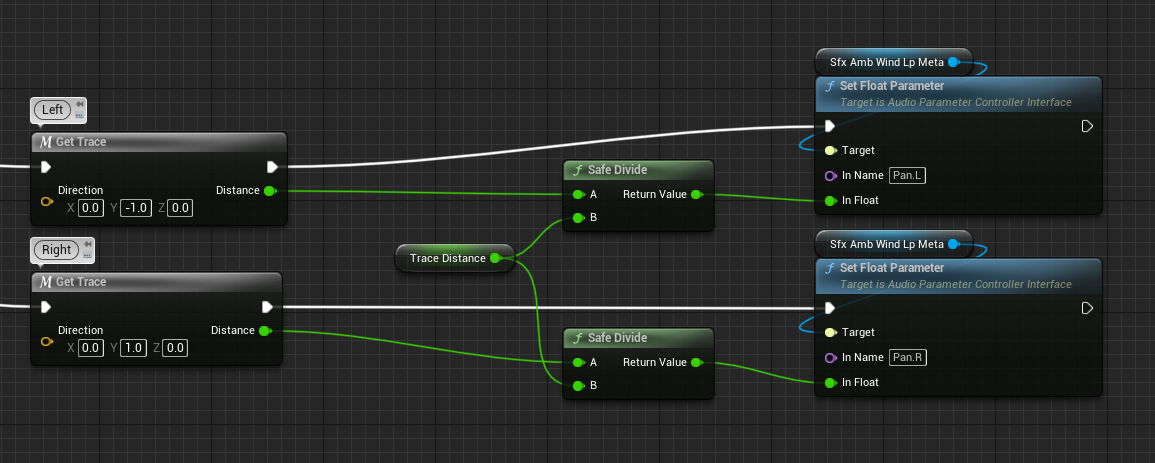

Mapping Geometry to Spatialization

The panning of the wind is calculated using the distance of the left and right-side traces as a ratio. This way, when you're out in an open space, but there's a big wall to your left, the wind will primarily come from the right side of the stereo field. The resulting value is also interpolated in the Metasound Source so that the panning effect is nice and smooth.
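One simple way to turn the two side distances into a pan value is a normalized difference. The exact ratio used in Lyra is not given, so the formula and the -1 (left) to +1 (right) convention below are assumptions.

```python
def wind_pan(left_distance: float, right_distance: float) -> float:
    # A nearby wall on the left (small left_distance) pushes the wind right.
    total = left_distance + right_distance
    if total <= 0.0:
        return 0.0
    return (right_distance - left_distance) / total
```

A trace that hits nothing would report its maximum trace length as the distance, so open space on both sides yields a centered pan.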

Speed Modulation

At some point, I also decided to have the wind react to the player's movement. You can very easily calculate a player's speed by taking the vector length of its velocity.

The speed is mapped to a 0-1 value, where 0 is roughly the player's running speed and 1 is roughly the player's dashing speed. This value is then passed to the Metasound Source so that the intensity of the wind increases when you move fast. This value is only mildly interpolated so that you still get a quick reaction time; this was necessary to create a feeling less like wind and more like air moving quickly past your head. You can hear this effect clearly when you're in freefall or chaining together dashes and jump pads.
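The speed mapping is a clamped linear remap of the velocity's length. In the sketch below, `run_speed` and `dash_speed` are placeholder numbers, not Lyra's actual movement values.

```python
import math
from typing import Tuple


def speed_to_wind_amount(velocity: Tuple[float, float, float],
                         run_speed: float = 600.0,
                         dash_speed: float = 2000.0) -> float:
    # Speed is the length of the velocity vector, including vertical motion.
    speed = math.sqrt(sum(c * c for c in velocity))
    t = (speed - run_speed) / (dash_speed - run_speed)
    return min(max(t, 0.0), 1.0)
```

Because the full three-dimensional velocity is used, freefall registers as speed even when the player is not moving horizontally.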

Initially, the wind system was only used for exterior environments such as the Control map. When I added speed modulation, it opened up the possibility of using this system in interior environments. I created an "interior" mode where the system ignores nearby geometry and only reacts to the player's three-dimensional speed. This mode is used for indoor scenarios, like the Elimination map.

Synthesizing Wind

The wind is generated using white noise and two filters in a Metasound Source. The internal parameters of these filters are driven using a sample & hold technique with a noise oscillator to get random, periodic values. These values are then interpolated so that fluctuations over time are buttery smooth. Everything is also modulated by a 0-1 real number (formerly called a float in Unreal) from the WindSystem Blueprint. Incorporating this value into the modulation allows the wind to get louder, deeper, and more energetic as the value approaches 1.0.
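The sample & hold plus interpolation idea can be sketched outside of Metasounds like this. It only models the control signal, not the noise source or the filters, and the function names, hold length, and smoothing coefficient are all hypothetical.

```python
import random
from typing import List


def sample_and_hold(rng: random.Random, num_samples: int, hold_length: int) -> List[float]:
    # Pick a new random value every hold_length samples and hold it in between.
    out = []
    held = 0.0
    for n in range(num_samples):
        if n % hold_length == 0:
            held = rng.random()
        out.append(held)
    return out


def one_pole_smooth(values: List[float], coefficient: float = 0.99) -> List[float]:
    # Simple one-pole lowpass that rounds off the steps in the held signal.
    out = []
    state = values[0] if values else 0.0
    for v in values:
        state = coefficient * state + (1.0 - coefficient) * v
        out.append(state)
    return out
```

The smoothed output could then drive something like a filter cutoff, scaled by the 0-1 value coming from the Blueprint.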

Happy noodling!